14/03/2023

Machine learning of mathematical models is found to have fundamental limitations

A study carried out by the URV’s SeesLab research group has shown for the first time that machine learning algorithms do not always succeed in finding interpretable models from data

Machine learning is behind many daily decisions. The personalized advertisements that appear on the Internet, the recommendations of contacts and content on social networks, and estimates of the probability that a medicine or treatment will work in certain patients are just some examples. This branch of artificial intelligence develops models capable of processing large amounts of data, learning automatically and identifying complex patterns so that predictions can be made. But because of the complexity of these models and their large number of parameters, when a machine learning algorithm malfunctions or erroneous behavior is detected, it is often impossible to identify the reason. In fact, even when the models work as expected, it is hard to understand why.

An alternative to these “black box” models, which are difficult or impossible to control, is to use machine learning to develop interpretable mathematical models. This alternative is gaining importance in the scientific community. Now, however, a study published in the journal Nature Communications by the URV’s SeesLab research group has confirmed that, in some cases, interpretable models cannot be identified on the basis of data alone.

Interpretable mathematical models are nothing new. For centuries and to this day, the scientific community has described natural phenomena using relatively simple mathematical models, such as Newton’s law of gravitation. Sometimes these models were arrived at deductively, starting from fundamental considerations. More frequently, though, the approach was inductive, starting from data. Currently, with the large amount of data available for any type of system, interpretable models can also be identified using machine learning.

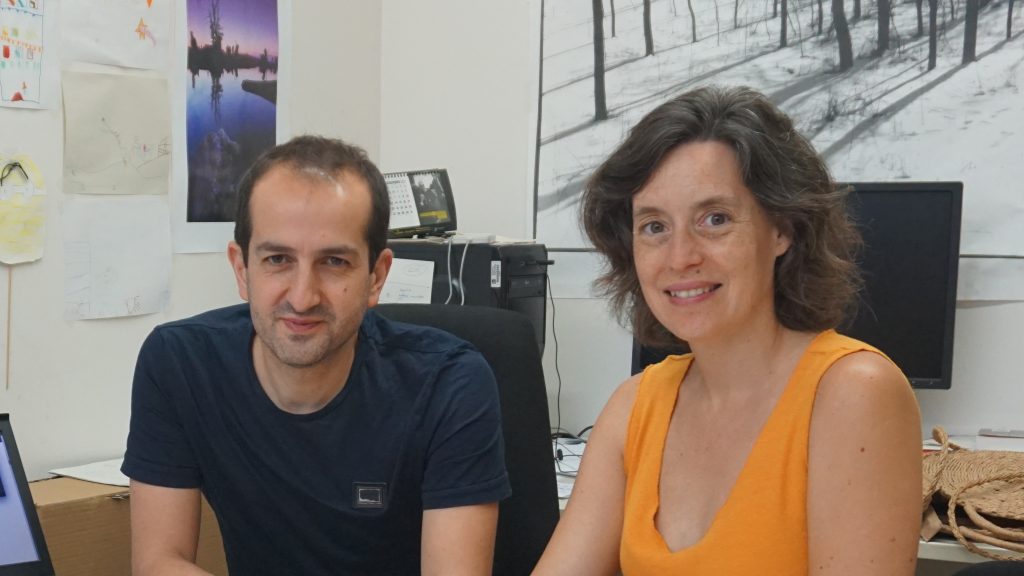

In fact, in 2020 the same research team designed a “scientific robot”: an algorithm capable of automatically identifying mathematical models that not only improve the reliability of predictions but also provide information that helps to understand the data, just as a scientist would. Now, the group has taken its research one step further. Marta Sales-Pardo, a professor in the URV’s Department of Chemical Engineering who took part in the research, has used this “scientific robot” to demonstrate that “sometimes it is not possible to determine the mathematical model that really governs a system’s behaviour.”

The importance of noise

All the data that can be obtained from a system contains “noise”; that is, it suffers from distortions or small fluctuations that are different every time the system is measured. If the data has little noise, a scientific robot will identify a clear model that can be shown to be the correct one. But the greater the variability, the more difficult it is to discover the correct model, as the algorithm may return more than one model that fits the data well. “When this happens, we talk about model uncertainty, since we cannot be sure which one is correct,” explains Roger Guimerà, an ICREA researcher in the same research group.

In the face of this uncertainty, the key is to use a rigorous (Bayesian) approach, which consists of applying probability theory without approximations. “Our study confirms that there is a level of noise beyond which no mechanism will succeed in discovering the correct model. It is a question of probability theory: many models are equally good for the data, and we cannot know which is the correct one,” he concludes. For example, if the thermometers that measure atmospheric temperature had reading errors of plus or minus 20 degrees Celsius, it would be impossible to develop good weather models: a model that always predicted 10 degrees (plus or minus 20) would be as “good” as a more detailed model that predicts rises and falls in temperature.
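
The thermometer example can be made concrete with a small simulation. The sketch below is only an illustration and not the method used in the study: it compares just two hand-picked linear models (a constant temperature and a constant plus a daily cycle) rather than searching over closed-form expressions. The true temperature curve (10 degrees on average with a 5-degree daily swing), the prior width `prior_sd`, the treatment of "plus or minus 20" as a Gaussian standard deviation, and all function names are assumptions made for the illustration. Because both models are linear with Gaussian priors and Gaussian noise, their Bayesian evidence can be computed exactly.

```python
import numpy as np


def log_evidence(features, y, noise_sd, prior_sd=10.0):
    """Exact log marginal likelihood of y under a linear model.

    Assumes independent N(0, prior_sd^2) priors on the coefficients and
    Gaussian noise of known standard deviation noise_sd, so that
    y ~ N(0, noise_sd^2 * I + prior_sd^2 * F F^T).
    """
    n = len(y)
    cov = noise_sd**2 * np.eye(n) + prior_sd**2 * (features @ features.T)
    _, logdet = np.linalg.slogdet(cov)
    quad = y @ np.linalg.solve(cov, y)
    return -0.5 * (quad + logdet + n * np.log(2.0 * np.pi))


def prob_detailed_model(noise_sd, seed, n_points=48):
    """Posterior probability of the daily-cycle model versus a constant one."""
    rng = np.random.default_rng(seed)
    hours = np.arange(n_points)
    cycle = np.sin(2.0 * np.pi * hours / 24.0)
    # Hypothetical ground truth: 10 degrees on average, swinging by 5.
    readings = 10.0 + 5.0 * cycle + rng.normal(0.0, noise_sd, n_points)

    constant = np.ones((n_points, 1))
    detailed = np.column_stack([np.ones(n_points), cycle])
    log_ratio = (log_evidence(constant, readings, noise_sd)
                 - log_evidence(detailed, readings, noise_sd))
    # Equal prior odds for both models; clip the exponent to avoid overflow.
    return 1.0 / (1.0 + np.exp(np.clip(log_ratio, -700.0, 700.0)))


def mean_prob_detailed(noise_sd, n_datasets=200):
    """Average the posterior probability over many simulated datasets."""
    return float(np.mean([prob_detailed_model(noise_sd, seed)
                          for seed in range(n_datasets)]))


if __name__ == "__main__":
    for noise_sd in (0.5, 20.0):
        print(noise_sd, round(mean_prob_detailed(noise_sd), 3))
```

Under these assumptions, accurate thermometers (noise of 0.5 degrees) should let the data identify the daily-cycle model almost with certainty, whereas with noise of 20 degrees the averaged probability should stay far from 1, meaning the data cannot tell the correct detailed model apart from the constant one.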

The results of this study overturn the previously held idea that it is always possible to use data to find the mathematical model that describes them. It has now been shown that if there is not enough data or if there is too much noise, this will be impossible, even if the correct model is simple. “We have become aware of a fundamental limitation of machine learning: the data may not be sufficient for us to find out what is happening in a specific system,” concludes the researcher.

Bibliographical reference: Fundamental limits to learning closed-form mathematical models from data. Oscar Fajardo-Fontiveros, Ignasi Reichardt, Harry R. De Los Ríos, Jordi Duch, Marta Sales-Pardo & Roger Guimerà. Nature Communications volume 14, Article number: 1043 (2023). DOI: https://doi.org/10.1038/s41467-023-36657-z